Facial recognition use by South Wales Police 'unlawful'

Police use of facial recognition cameras has been ruled "unlawful" by the Court of Appeal, in what was described as a "major victory" in the fight against the tech.

The ruling, which comes almost a year after the High Court dismissed a case by Ed Bridges over South Wales Police's deployment of the kit, means the force will have to halt its long-running trial of facial recognition cameras.

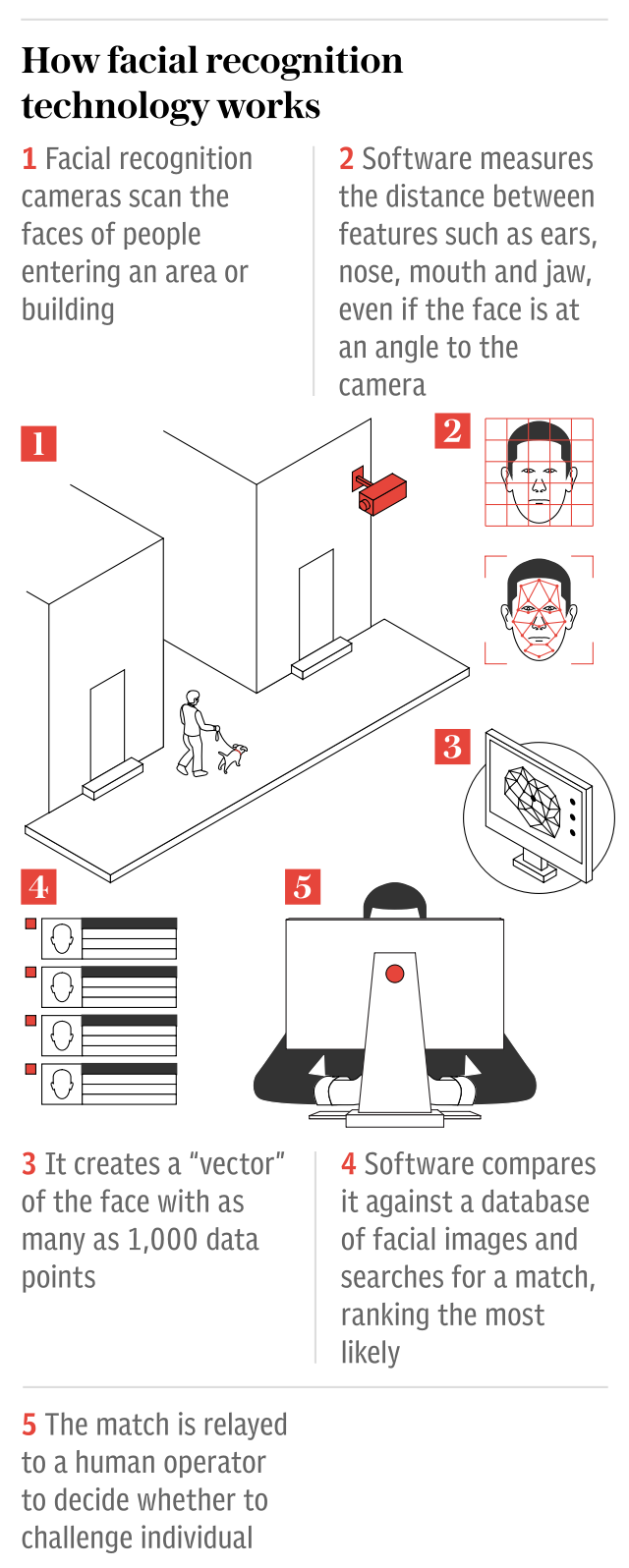

The Court of Appeal said it had made the decision as there was no clear guidance on where the police's live facial recognition technology, which compares live CCTV footage to a watchlist of faces and is called "AFR Locate", could be used and who could be put on the watchlist.

It said South Wales Police had also not taken reasonable steps to make enquiries over whether the tech was biased on the grounds of race or gender.

Some changes were needed to the regulatory framework for police forces to start using the technology, it said, although the court stopped short of ruling that new legislation was needed.

However, the decision was hailed as a victory by Mr Bridges, who had brought the legal claim against South Wales Police in 2018, arguing his privacy and data protection rights had been violated after his face was scanned whilst shopping.

Previously, the High Court had said this had not been unlawful use of the tech, although it had “deep concerns” over its potential threat to privacy.

On the Court of Appeal ruling, Mr Bridges said: “I’m delighted that the Court has agreed that facial recognition clearly threatens our rights.

“This technology is an intrusive and discriminatory mass surveillance tool. For three years now South Wales Police has been using it against hundreds of thousands of us, without our consent and often without our knowledge. We should all be able to use our public spaces without being subjected to oppressive surveillance.”

Campaign group Liberty, who represented Mr Bridges, said it was now "time for the Government to recognise the serious dangers of this intrusive technology".

However, others claimed that the case actually mapped "the way ahead" for how police forces could use facial recognition in future, rather than dealing it a blow.

Anne Studd QC, of 5 Essex Court Chambers, said the judgment was "a significant one because the Court declined to rule that, in order lawfully to use live AFR, primary legislation needs to be enacted (in order to regulate processing of images in the same way as fingerprints or DNA is processed by the police service)".

"Instead, the Court has identified the relatively modest changes to the policy framework that are needed in order that live AFR can continue to be used."

The case came just months after police in the US faced questions over how they were using facial recognition during the Black Lives Matter protests.

That led to companies including Amazon blocking US police from using their facial recognition kit to allow time for legislation in the space to be introduced.

Yahoo News
