Secret internal Facebook documents show just how much users can get away with posting (FB)

David Ramos/Getty Images

Facebook users can get away with posting a wide range of controversial material, according to internal documents obtained and published by The Guardian.

The "Facebook Files", a selection of more than 100 internal training manuals, spreadsheets, and flowcharts, provide a rare insight into how Facebook's moderation team works and what it looks out for.

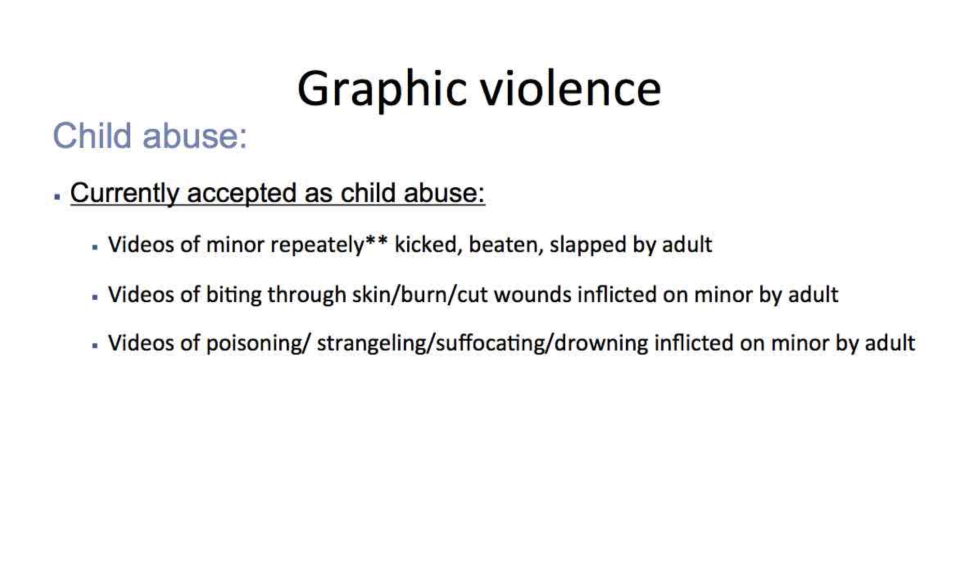

The documents, which show how Facebook is trying to deal with some of the most disturbing content on its platform, reveal that Facebook users are allowed to post:

- live-streams of people self-harming
- suicide attempts
- comments like "To snap a bitch’s neck, apply your pressure to the middle of her throat" and "fuck off and die"
- videos of violent deaths
- certain photos of children being abused or bullied
- videos of abortions, provided there is no nudity
- photos of animal abuse

Facebook uses community reporting, human review, and automation to detect inappropriate material on its platform.

When a piece of content is reported, Facebook's army of human and robotic moderators can choose to either take no action, mark content as "disturbing," or remove it entirely.

Facebook allows many postings to remain online to inform its 2 billion users about topics it deems important, including the suffering of innocent people. It also leaves many postings up in a bid to support freedom of expression.

For example, the company writes in its guidelines that "it doesn't want to censor or punish people in distress who are attempting suicide."

Facebook/The Guardian


Monika Bickert, head of global policy management at Facebook, said in a statement:

"Keeping people on Facebook safe is the most important thing we do. We work hard to make Facebook as safe as possible while enabling free speech. This requires a lot of thought into detailed and often difficult questions, and getting it right is something we take very seriously. Mark Zuckerberg recently announced that over the next year, we'll be adding 3,000 people to our community operations team around the world — on top of the 4,500 we have today — to review the millions of reports we get every week, and improve the process for doing it quickly.

"In addition to investing in more people, we're also building better tools to keep our community safe. We’re going to make it simpler to report problems to us, faster for our reviewers to determine which posts violate our standards and easier for them to contact law enforcement if someone needs help."

