AI Promised to Upend the 2024 Campaign. It Hasn’t Yet.

Artificial intelligence helped make turnout predictions in the Mississippi elections last year, when one group used the technology to transcribe, summarize and synthesize audio recordings of its door knockers’ interactions with voters into reports on what they were hearing in each county.

Another group recently compared messages translated into six Asian languages by humans and by AI and found them similarly effective. A Democratic firm tested four versions of a voice-over ad (two spoken by humans, two by AI) and found that the male AI voice was as persuasive as its human counterpart, although the human female voice outperformed its AI equivalent.

The era of artificial intelligence has officially arrived on the campaign trail. But the much-anticipated, and feared, technology remains confined to the margins of American campaigns.

Sign up for The Morning newsletter from the New York Times

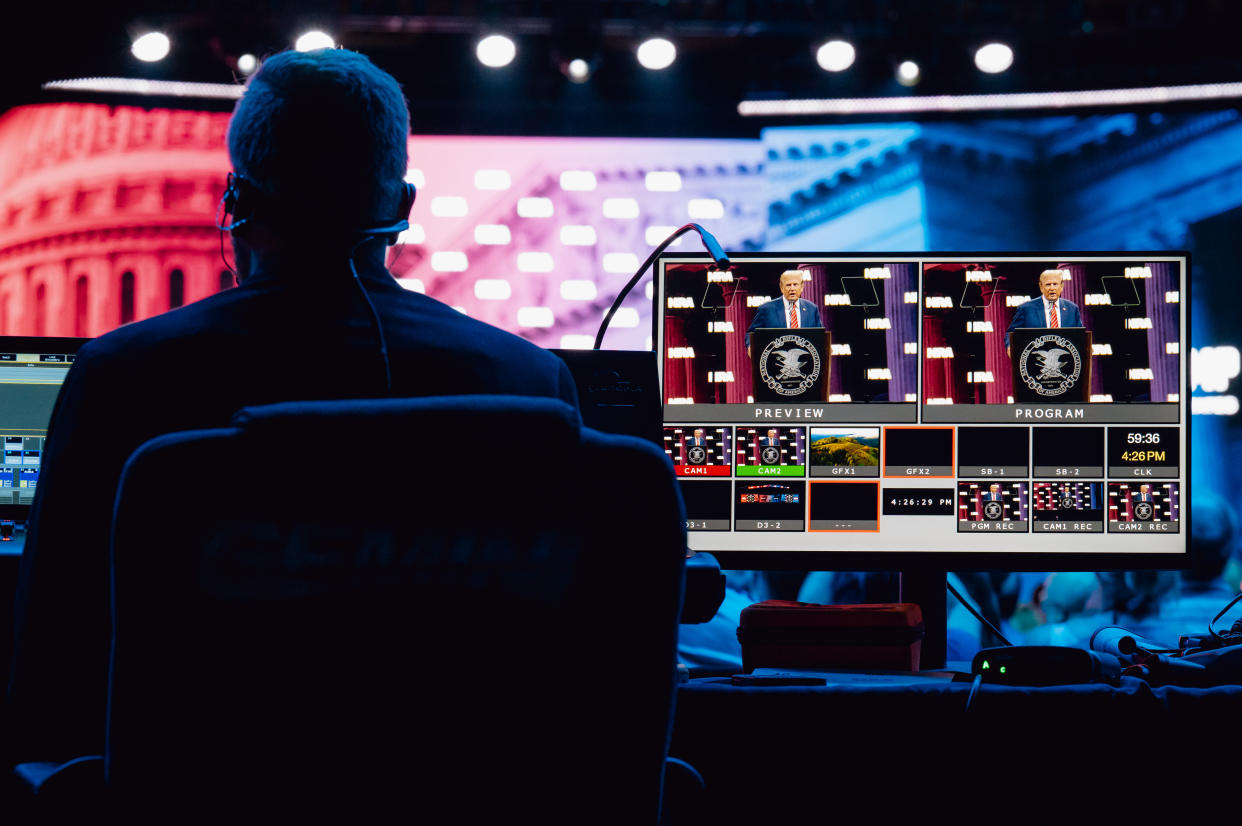

With less than six months until the 2024 election, the political uses of AI are more theoretical than transformational, whether as a constructive communications tool or as a way to spread dangerous disinformation. The Biden campaign said it has strictly limited its use of generative AI (which creates text, audio or images from prompts) to productivity and data-analysis tools, while the Trump campaign said it does not use the technology at all.

“This is the dog that didn’t bark,” said Dmitri Mehlhorn, a political adviser to one of the Democratic Party’s most generous donors, Reid Hoffman. “We haven’t found a cool thing that uses generative AI to invest in to actually win elections this year.”

Hoffman is hardly an AI skeptic. He was previously on the board of OpenAI, and recently sat for an “interview” with an AI version of himself. For now, though, the only political applications of the technology that merit Hoffman’s money and attention are what Mehlhorn called “unsexy productivity tools.”

Eric Wilson, a Republican digital strategist who runs an investment fund for campaign technology, agreed. “AI is changing the way campaigns are run but in the most boring and mundane ways you can imagine,” he said.

Technologists and political operatives have little doubt of AI’s power to transform the political stage — eventually. A new report from Higher Ground Labs, which invests in political technology companies to benefit progressive causes and candidates, found that while the technology remains in “the experimental stage,” it also represents “a generational opportunity” for the Democratic Party to get ahead.

For now, the Democratic National Committee has been experimenting more modestly, such as using AI to spot anomalous patterns in voter registration records and find notable voter removals or additions.

Jeanine Abrams McLean, the president of Fair Count, the nonprofit that led the AI experiment in Mississippi, said the pilot project had involved 120 voice memos that canvassers recorded after meeting with voters and that were then transcribed by AI. The team then used the AI tool Claude to map geographic differences in opinion based on what canvassers said about their interactions.

“Synthesizing the voice memos using this AI model told us the sentiments coming out of Coahoma County were much more active, indicating a plan to vote,” she said. “Whereas we did not hear those same sentiments in Hattiesburg.”

Sure enough, she said, turnout had wound up lower in the Hattiesburg area.

Larry Huynh, who oversaw the AI voice-over ads, said he was surprised at how the AI voices had stacked up. He and most of his colleagues at the Democratic consulting firm Trilogy Interactive had thought the male AI voice sounded “the most stilted.” Yet it proved persuasive, according to testing.

“You don’t have to necessarily have a human voice to have an effective ad,” said Huynh, who as president of the American Association of Political Consultants thinks heavily about the ethics and economics of AI technology. Still, he added, tinkering with models to create a new AI voice had been as work-intensive and costly as hiring a voice actor.

“I don’t believe,” he said, “it actually saved us money.”

Both Democrats and Republicans are also racing to shield themselves against the threat of a new category of political dark arts, featuring AI-fueled disinformation in the form of deepfakes and other false or misleading content. Before the New Hampshire primary in January, an AI-generated robocall that mimicked President Joe Biden’s voice in an attempt to suppress votes led to a new federal rule banning such calls.

For regulators, lawmakers and election administrators, the incident underscored their disadvantages in dealing with even novice mischief-makers, who can move more quickly and anonymously. The fake Biden robocall was made by a magician in New Orleans who holds world records in fork bending and escaping from a straitjacket. He has said he used an off-the-shelf AI product that took him 20 minutes and cost $1.

“What was concerning was the ease with which a random member of the public who really doesn’t have a lot of experience in AI and technology was able to create the call itself,” David Scanlan, New Hampshire’s secretary of state, told a Senate committee hearing on AI’s role in the election this spring.

AI is “like a match on gasoline,” said Rashad Robinson, who helped write the Aspen Institute’s report on information disorder after the 2020 race.

Robinson, the president of Color of Change, a racial-justice group, outlined the kind of “nightmare” scenario he said would be all but impossible to stop. “You can have the voice of a local reverend calling 3,000 people, telling them, ‘Don’t come down to the polls because there are armed white men and I’m fighting for an extra day of voting,’” he said. “The people who are building the tools and platforms that allow this to happen have no real responsibility and no real consequence.”

The prospect of similar eleventh-hour, AI-fueled disruptions is causing Maggie Toulouse Oliver, New Mexico’s secretary of state, to lose sleep. In the run-up to her state’s primary, she has rolled out an ad campaign warning voters that “AI won’t be so obvious this election season” and advising “when in doubt, check it out.”

“So often in elections, we’re behind the eight ball,” she said, adding, “And now we have this new wave of activity to deal with.”

AI has already been used to mislead in campaigns abroad. In India, an AI version of Prime Minister Narendra Modi has addressed voters by name on WhatsApp. In Taiwan, an AI rendering of the outgoing president, Tsai Ing-wen, seemed to promote cryptocurrency investments. In Pakistan and Indonesia, dead or jailed politicians have reemerged as AI avatars to appeal to voters.

So far, most fakes have been easily debunked. But Microsoft’s Threat Analysis Center, which studies disinformation, warned in a recent report that deepfake tools are growing more sophisticated by the day, even if one capable of swaying American elections “has likely not yet entered the marketplace.”

In the 2024 race, many candidates are approaching artificial intelligence warily, if at all.

The Trump campaign “does not engage in or utilize AI,” according to a statement from Steven Cheung, a spokesperson. He said, however, that the campaign does use “a set of proprietary algorithmic tools, like many other campaigns across the country, to help deliver emails more efficiently and prevent sign-up lists from being populated by false information.”

The Trump campaign’s reticence toward AI, however, has not stopped his supporters from using the technology to craft deepfake images of the former president surrounded by Black voters, a constituency he is aggressively courting.

The Biden campaign said it has strictly limited its use of AI. “Currently, the only authorized campaign use of generative AI is for productivity tools, such as data analysis and industry-standard coding assistants,” said Mia Ehrenberg, a campaign spokesperson.

A senior Biden official, granted anonymity to speak about internal operations, said AI is deployed most often in the campaign to find behind-the-scenes efficiencies, such as testing which marketing messages lead to clicks and other forms of engagement, a process known as conversion marketing. "Not the stuff of science fiction," the official added.

Artificial intelligence occupies such a central spot in the zeitgeist that some campaigns have found that just deploying the technology helps draw attention to their messaging.

After the National Republican Congressional Committee showed AI-generated images of national parks as migrant tent cities last year, a wave of news coverage followed. In response to a recording released by the former president’s daughter-in-law, Lara Trump (her song was called “Anything is Possible”), the Democratic National Committee used AI to create a dis track mocking her and GOP fundraising, earning the attention of the celebrity gossip site TMZ.

Digital political strategists, however, are still feeling out how well AI tools actually work. While many involve mundane data-crunching efforts, some involve novel ideas, such as an AI-powered tool to prevent the person in a video from breaking eye contact, which could streamline the recording of scripted videos. With the White House blocking the release of audio from Biden’s interview with a special counsel, Republicans could instead use an AI-generated track of Biden reading the transcript for dramatic effect.

“I don’t know a single person who hasn’t tried prewriting their content,” Kenneth Pennington, a Democratic digital strategist, said of using generative AI to write early drafts of fundraising messages. “But I also don’t know many people who felt the process was serviceable.”

In Pennsylvania, one congressional candidate used an AI-powered phone banking service to conduct interactive phone conversations with thousands of voters.

“I share everyone’s grave concerns about the possible nefarious uses of AI in politics and elsewhere,” the candidate, Shamaine Daniels, said on Facebook. “But we need to also understand and embrace the opportunities this technology represents.”

She finished the contest in a distant third place.

c.2024 The New York Times Company

Yahoo News