You can now control an iPhone or iPad with your eyes with Apple’s new features

They’re putting the “eye” in iPhone.

Apple users will soon be able to control their iPhones and iPads with their eyes after the tech giant unveiled an array of new accessibility features for its products.

“Eye Tracking,” which is designed for users with physical disabilities, will be powered by artificial intelligence and won’t require any additional hardware.

According to an announcement by Apple on Wednesday, the feature will use the front-facing camera of an iPhone or iPad to trace a user’s eye movement as they navigate “through elements of an app.”

“We believe deeply in the transformative power of innovation to enrich lives,” CEO Tim Cook declared in the media release.

“We’re continuously pushing the boundaries of technology, and these new features reflect our long-standing commitment to delivering the best possible experience to all of our users.”

Cook didn’t disclose exactly when Eye Tracking will be available, but the feature is “likely to debut in iOS and iPadOS” updates due out later this year, per The Verge.

The accessibility feature is one of several set to come to iPhones and iPads soon.

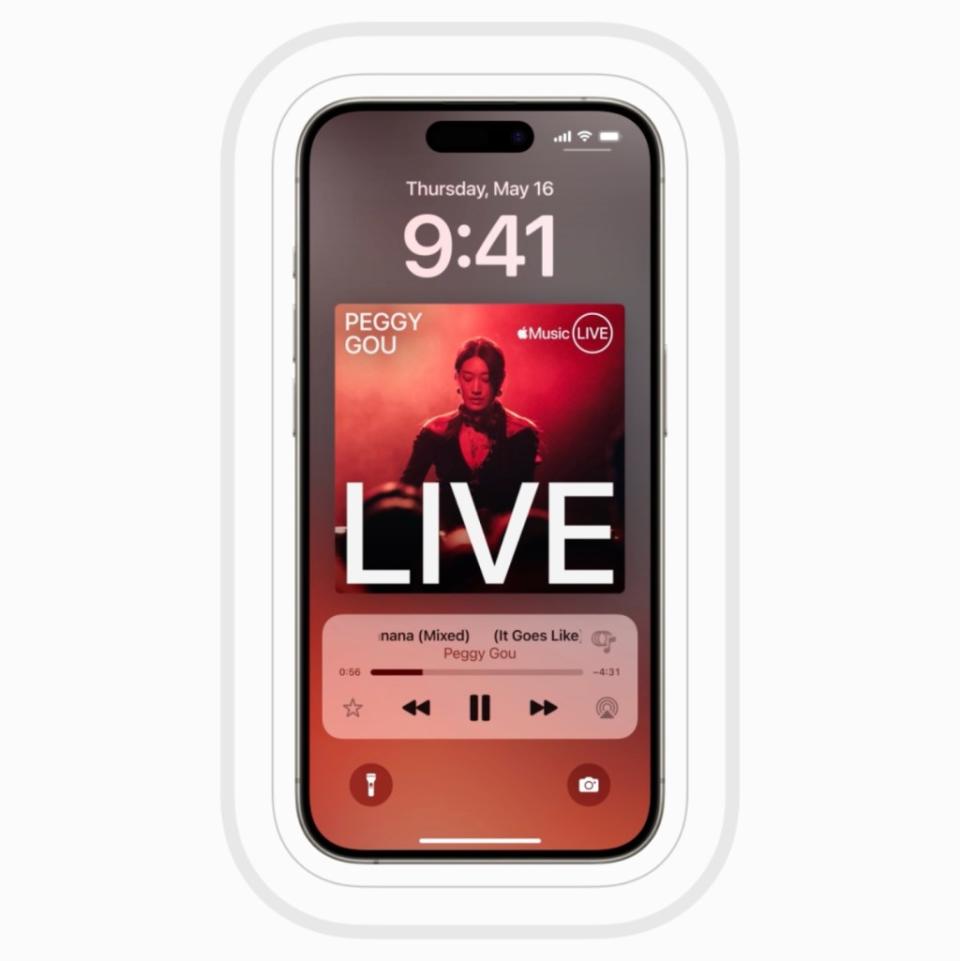

The tech giant also announced a Music Haptics feature, which will allow users who are deaf or hard of hearing to experience music through taps, textures and refined vibrations matched to audio.

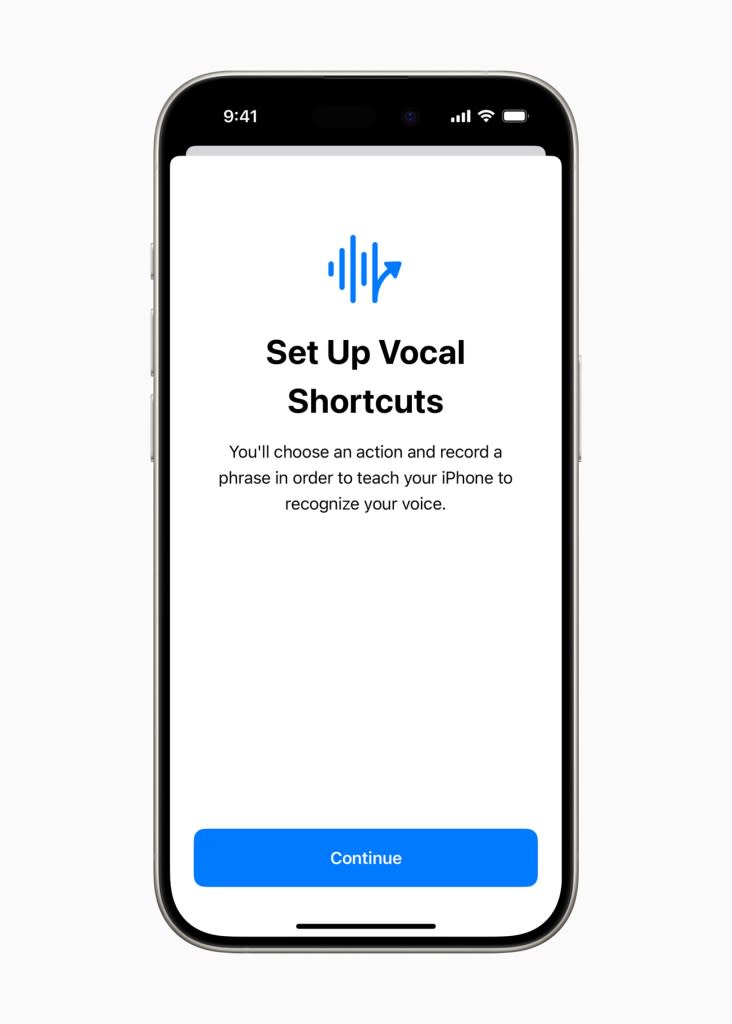

Meanwhile, an Atypical Speech feature will be available for people with speech impairments.

Using artificial intelligence, the feature will recognize a user’s unique speech patterns and translate them into instructions that Siri can understand.

“Artificial intelligence has the potential to improve speech recognition for millions of people with atypical speech, so we are thrilled that Apple is bringing these new accessibility features to consumers,” Professor Mark Hasegawa-Johnson from the University of Illinois Urbana-Champaign declared in the company’s release.

Yahoo News