Reading your mind: This AI system can translate thoughts to text

Artificial intelligence has been shocking and inspiring millions of people in recent months with its ability to write like a human, create stunning images and videos, and even produce songs that are shaking up the music industry.

Now, researchers have revealed another potential application that could have huge implications - AI that can essentially read minds.

They have been working on a new AI system that can translate someone’s brain activity into a continuous stream of text.

Called a semantic decoder, the system measures brain activity with an fMRI (functional magnetic resonance imaging) scanner, and can generate text from brain activity alone.

The researchers behind the work, from the University of Texas at Austin, say the AI could one day help people who are mentally conscious but physically unable to speak, such as people who have suffered severe strokes.

“For a noninvasive method, this is a real leap forward compared to what’s been done before, which is typically single words or short sentences,” said Alex Huth, an assistant professor of neuroscience and computer science at UT Austin, and one of the authors of the paper.

“We’re getting the model to decode continuous language for extended periods of time with complicated ideas”.

There are other language decoding systems in development, but these require subjects to have surgical implants and are therefore classed as invasive.

The semantic decoder is non-invasive, as no implants are required. Unlike other decoders, it also does not restrict subjects to words from a prescribed list. The decoder is first trained extensively on fMRI scans taken while the subject listens to hours of podcasts. After that, the subject listens to a new story, or imagines telling a story, and the decoder generates the corresponding text from their brain activity.

Decoding ‘the gist’ of the thought

The researchers explained the results are not a word-for-word transcript of what the subject hears or says in their mind, but the decoder catches “the gist” of what is being thought. Around half of the time the machine is capable of producing text that closely - and sometimes precisely - matches the intended meanings of the original words.

In one experiment, for example, a listener heard a speaker say: “I don’t have my driver’s licence yet,” and the machine translated their thoughts to: “She has not even started to learn to drive yet”.

During testing, the researchers also asked subjects to watch short silent videos while being scanned, and the decoder was able to use their brain activity to accurately describe some events from the videos.

The system is not currently practical for wider use because it relies on fMRI machines. However, the researchers believe the process could be transferred to more portable brain-imaging systems such as functional near-infrared spectroscopy (fNIRS).

“fNIRS measures where there’s more or less blood flow in the brain at different points in time, which, it turns out, is exactly the same kind of signal that fMRI is measuring,” Huth said. “So, our exact kind of approach should translate to fNIRS,” although, he noted, the resolution with fNIRS would be lower.

The researchers addressed potential concerns regarding the use of this sort of technology, with lead author Jerry Tang, a doctoral student in computer science, saying: “We take very seriously the concerns that it could be used for bad purposes and have worked to avoid that”.

They insist the system cannot be used on anyone against their will, as it needs to be extensively trained on a willing participant. “A person needs to spend up to 15 hours lying in an MRI scanner, being perfectly still, and paying good attention to stories that they’re listening to before this really works well on them,” explained Huth.

The findings were published in the journal Nature Neuroscience.

Decoding images from the mind

Translating brain activity into written words isn’t the only form of mind reading that researchers are testing artificial intelligence on.

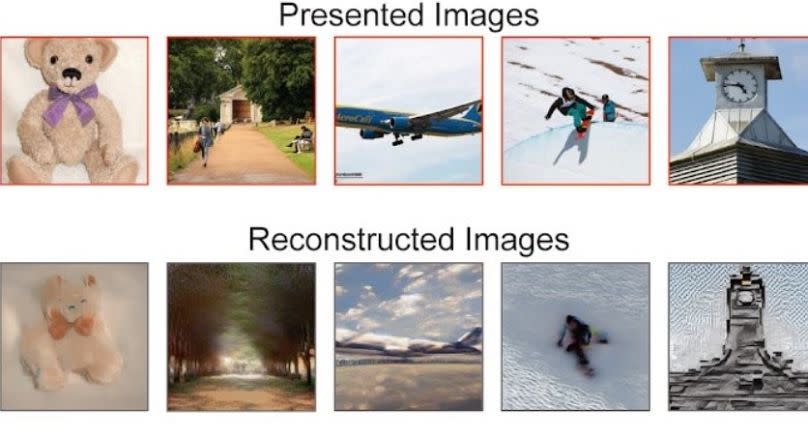

Another recent study, published in March, revealed how AI can read brain scans to recreate images a person has seen.

The researchers at Osaka University in Japan used Stable Diffusion - a text-to-image generator similar to Midjourney and OpenAI’s DALL-E 2.

That system was capable of reconstructing visual experiences from human brain activity, thanks again to fMRI brain scans taken while a subject looked at a visual cue.

Yahoo News
