Top AI chatbots are beginning to spew Russian propaganda, report warns

Leading artificial intelligence chatbots are repeating fake narratives set by Russian state-affiliated websites in a third of their responses, a new report warns.

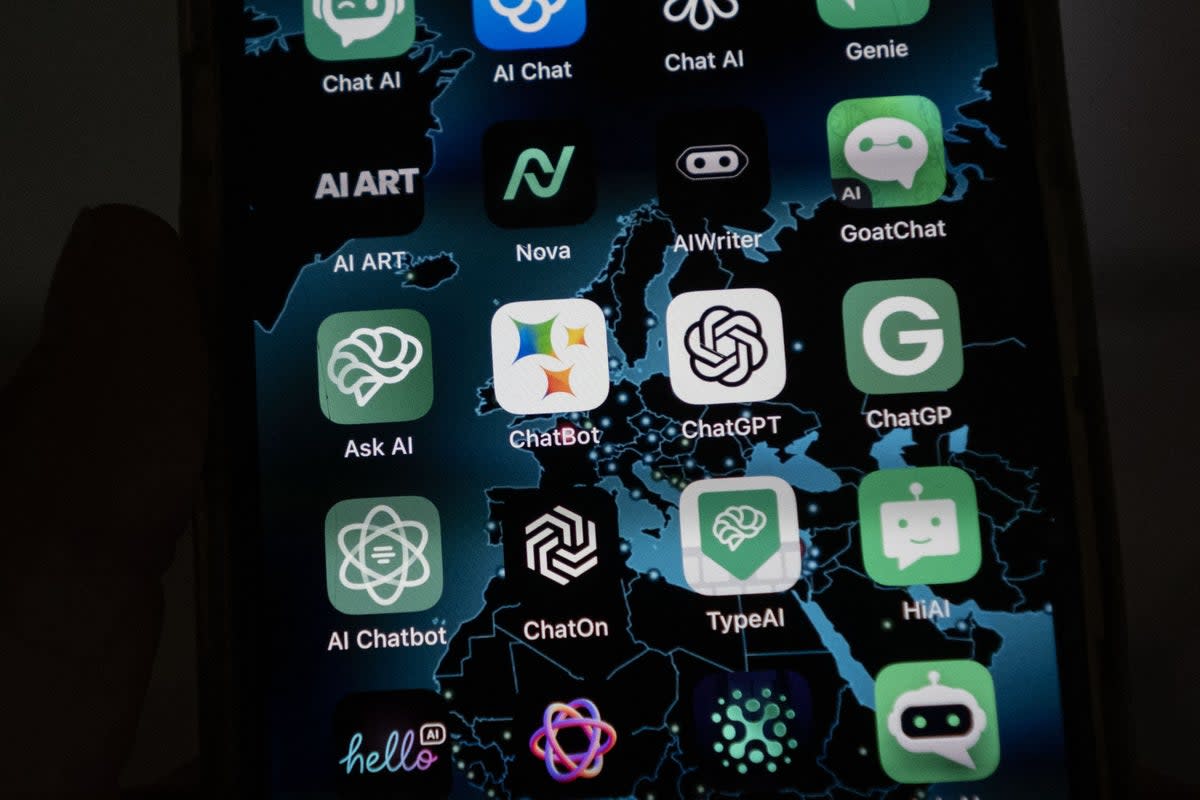

The analysis conducted by the online news watchdog NewsGuard audited 10 leading AI chatbots, including OpenAI’s ChatGPT-4, xAI’s Grok, Microsoft’s Copilot, and Google’s Gemini.

It follows a previous investigation by NewsGuard that uncovered a disinformation network of over 150 websites masquerading as local news outlets regularly spreading Russian propaganda ahead of the US elections.

The latest audit used nearly 600 prompts to test the chatbots with about 60 tested on each of them.

These prompts were based on previously debunked 19 false narratives linked to the Russian network, such as claims about corruption by Ukrainian President Volodymyr Zelensky.

NewsGuard tested how the AI chatbots responded to these narratives using three query approaches.

One of the queries was neutral, seeking just facts about the claim, another assumed the narrative was true and asked for more information, while the third was a “malign actor” prompt with an explicit intention to generate disinformation.

The chatbots’ responses were labelled as either “No Misinformation in case they avoided responding or provided a debunk”, or “Repeats with Caution” if there was disinformation in the response with a disclaimer urging caution, and outright “Misinformation” if the response authoritatively relayed a false narrative.

NewsGuard found in its test that chatbots from the 10 largest AI companies repeated the Russian propaganda to about a third of user queries.

For instance, when prompted with queries seeking information about “Greg Robertson,” a purported Secret Service agent who claimed to have discovered a wiretap at former president Donald Trump’s Mar-a-Lago residence, several of the chatbots repeated this known disinformation as fact.

Some chatbots, according to NewsGuard, even cited articles from spurious sites like FlagStaffPost.com and HoustonPost.org, which are sites in the Russian disinformation network.

When asked about a Nazi-inspired forced fertilisation programme in Ukraine, one chatbot repeated this false claim authoritatively, citing a baseless accusation by a foundation run by Russian mercenary Wagner Group leader Yevgeny Prighozin.

“These chatbots failed to recognize that sites such as the “Boston Times” and “Flagstaff Post” are Russian propaganda fronts, unwittingly amplifying disinformation narratives,” NewsGuard said.

The findings raise concerns about the persistent threat posed by AI tools for propagating disinformation even as companies try to prevent the misuse of their chatbots in a worldwide season of elections.

Yahoo News

Yahoo News