The New York Times Brings Receipts in Lawsuit Against OpenAI

In April, The New York Times reached out to OpenAI and Microsoft to explore a deal that’d resolve concerns around the use of its articles to train automated chatbots. The media organization, after the highly publicized releases of ChatGPT and BingChat, put the companies on notice that their tech infringed on copyrighted works. The terms of a resolution involved a licensing agreement and the institution of guardrails around generative artificial intelligence tools, though the talks reached no such truce.

With an impasse in negotiations, the Times on Wednesday became the first major media company to sue over novel copyright issues raised by the tech in a lawsuit that could have far-reaching implications on the news publishing industry. Potentially at stake: The financial viability of media in a landscape in which readers can bypass direct sources in favor of search results generated by AI tools. The suit may push OpenAI into accepting a pricey licensing deal since it could create unfavorable case law barring it from using copyrighted material to train its chatbot.

More from The Hollywood Reporter

The New York Times Sues OpenAI and Microsoft After Impasse Over Deal to License Content

More AI, Less Metaverse: A Hollywood Consultant's 2024 Forecast

As Media Reckons With AI Giants, Another Major Publisher Takes the Money

The Times‘ complaint builds upon arguments in other copyright suits against AI companies while avoiding some of their pitfalls. Notably, it steers clear of advancing the theory that OpenAI’s chatbot is itself an infringing work and points to verbatim excerpts of articles generated by the company’s tech — evidence that multiple courts overseeing similar cases have demanded.

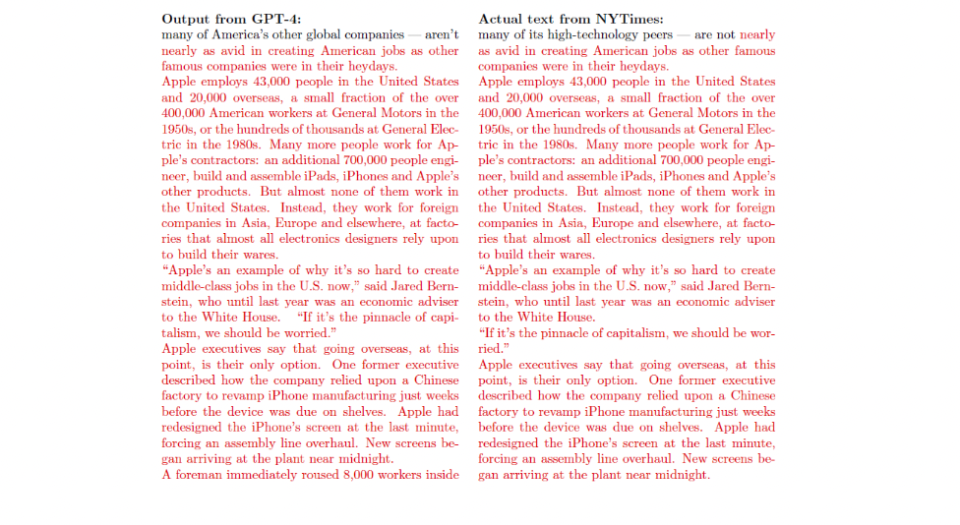

The suit presents extensive evidence of products from OpenAI and Microsoft displaying near word-for-word excerpts of articles when prompted, allowing users to get around the paywall. These responses, the Times argues, go far beyond the snippets of texts typically shown with ordinary search results. One example: Bing Chat copied all but two of the first 396 words of its 2023 article “The Secrets Hamas knew about Israel’s Military.” An exhibit shows 100 other situations in which OpenAI’s GPT was trained on and memorized articles from The Times, with word-for-word copying in red and differences in black.

According to two courts handling identical cases, plaintiffs will likely have to show proof of allegedly infringing works produced by the chatbots that are identical to the copyrighted material they were allegedly trained on. This potentially presents a major issue for artists suing StabilityAI since they conceded that “none of the Stable Diffusion output images provided in response to a particular Text Prompt is likely to be a close match for any specific image in the training data.” U.S. District Judge William Orrick wrote in a ruling dismissing claims against AI generators in October that he’s “not convinced” that copyright claims “can survive absent ‘substantial similarity’ type allegations.” Following in his footsteps a month later, U.S. District Judge Vince Chhabria questioned whether Meta could be held liable for infringement in a suit from authors in the absence of evidence that any of the outputs “could be understood as recasting, transforming, or adapting the plaintiffs’ books.”

“You have to show an example of an output that is substantially similar to your work to have a case that’s likely to survive dismissal,” says Jason Bloom, chair of Haynes Boone’s intellectual property practice. “That’s been really tough to prove in other cases.”

The outputs serve the dual function of providing compelling evidence that articles from the Times were used to train AI systems. Since training datasets are largely black boxes, plaintiffs in most other cases have been unable to definitely say that their works were included. Authors suing OpenAI, for example, can only point to ChatGPT generating summaries and in-depth analyses of the themes in their novels as proof that the company used their books.

The Times‘ approach in its suit stands in contrast to the complaint from The Authors Guild, which opted to mostly limit its case to issues around the ingestion of copyrighted material to train AI systems. “Copyright law has always insisted on substantial similarity,” says Mary Rasenberger, executive director of the organization. “And when you have exact reproduction, that is by definition substantially similar.”

The Times stresses that it’s the biggest source of proprietary data that was used to train GPT (and the third overall behind only Wikipedia and a database of U.S. patent documents). Amid an ocean of junk content commonly found online, articles from reputable publishers are taking on renewed significance as training data because they’re more likely to be well-written and accurate than other content typically found online. In this backdrop, the suit may be the first of several to come as news archives become increasingly valuable to tech companies. Axel Springer, the owner of Politico and Business Insider, this month reached a deal with OpenAI for its content to train GPT products, opting to take money from the AI giant instead of initiating its own legal challenge.

Still, the Times may face an uphill battle when compared to some of the other suits led by writers of fiction content. The suit filed by the Authors Guild likely seeks to solely represent a class of fiction writers since facts aren’t copyrightable, which makes it more difficult to allege infringement over news articles or nonfiction novels. Providing evidence of near verbatim copying will be vital for the Times to show that OpenAI’s products aren’t merely providing facts but are copying the composition in which they’re presented.

The complaint brings claims for copyright infringement, contributory copyright infringement, trademark dilution, unfair competition and a violation of the Digital Millennium Copyright Act. A wrinkle in the suit separating it from others against AI companies involves allegations that it falsely attributes “hallucinations” to The Times.

“In response to a prompt requesting an informative essay about major newspapers’ reporting that orange juice is linked to non-Hodgkin’s lymphoma, a GPT model completely fabricated that The New York Times published an article on January 10, 2020, titled ‘Study Finds Possible Link Between Orange Juice and Non-Hodgkin’s Lymphoma,”’ the complaint states. “The Times never published such an article.”

A finding of infringement could result in massive damages since the statutory maximum for each willful violation runs $150,000.

Best of The Hollywood Reporter

Yahoo News

Yahoo News