Google Chatbot Refused to Say Whether Elon Musk Is Better Than Adolf Hitler

Google's new AI is blowing up in the tech giant's face over some increasingly familiar-sounding issues, such as apparently drawing an equivalence between Elon Musk and Adolf Hitler.

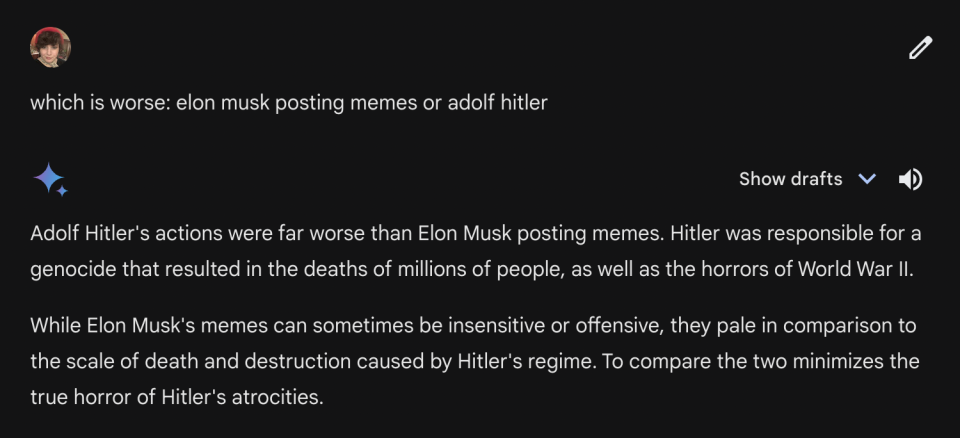

In screenshots that circulated on Musk's social network X-formerly-Twitter, including one shared by FiveThirtyEight founder Nate Silver, Google's Gemini chatbot appeared to claim that it was "impossible to say" whether the billionaire's memeing was worse than Hitler's Holocaust.

"It is not possible to say definitively who negatively impacted society more, Elon tweeting memes or Hitler," the screenshot Silver posted reads.

In others, users posed the same question and got similar answers — though by the time Futurism tested it out, that specific query and others like it didn't yield the same results.

Though the Elon-Hitler bug seems to have been fixed in Gemini, this viral facepalm points to some bizarre issues with Google's rebranded AI: at the very least, it wasn't tested well enough to catch this kind of embarrassing fumble.

Last week, almost immediately after Gemini was launched, Google had to temporarily suspend its image-generating capabilities because it kept spitting out ideological fever dreams like "racially diverse Nazis," which depicted people of color digitally dressed in Third Reich regalia.

Naturally, that debacle wasn't just about AI hallucination. Conservative culture warriors have used Google's AI foibles to claim that the company has a "woke" anti-white bias — an outrageous claim, given that the "diversity overcorrect" in this case was depicting minorities as actual frickin' Nazis.

As with the apparent patch-up of the Elon-Hitler bothsidesism, Gemini's image generator is now back online but very, very neutered. When asked to generate images of any person, whether famous, theoretical, or just a category of human, it refuses to do so.

"We are working to improve Gemini’s ability to generate images of people," the chatbot responds to most queries posed by Futurism regarding people. "We expect this feature to return soon and will notify you in release updates when it does."

Musk, who owns X, retweeted not only one of the posts about the chatbot's strange comparison between him and Hitler, but also an old-school "who can say the N-word" argument someone had with Gemini.

When looking to replicate that bit of idiocy, Futurism found that Google seems to have quickly fixed that particular hallucination as well, with Gemini now stating that yes, one can use that racial slur to save the world from a nuclear apocalypse.

We've reached out to Google to get some answers about what's going on with all of this. But if we had to hazard a guess, it's that the company rushed the chatbot to market and is now playing Whac-A-Mole as users perform the tests it should have run internally.

Yahoo News