Infocalypse: The terrifying technologies behind the next stage of ‘fake news’

Facebook’s Cambridge Analytica scandal has highlighted how social media can be used as a weapon in information warfare.

But in the very near future, it may no longer be possible to tell what is ‘fake news’ and what is not, experts have warned.

This isn’t an out-there prediction of the far future – the technologies to do this are already here, according to experts.

In December, researchers found malware – the Terdot banking Trojan – which takes over people’s computers and posts to Twitter for them.

Such software could be a powerful tool for spreading fake news, says Jay Coley, Senior Director of Security Planning and Strategy at Akamai.

Coley says that the ‘troll farms’ which became notorious during the 2016 American election are now switching to different, more subtle tactics.

Coley told Yahoo News, ‘If you look at some of the troll farms we see operating, they’re coming into the spotlight more and more.’

Coley adds, ‘Hackers are looking for different methods of doing this: we’re seeing a resurgence of “credential stuffing” attacks where people steal social media logins.

‘Malicious actors can use these tactics to post fake news stories. The malicious actors are going to work harder at hiding themselves. You might not see those big blasts of fake news, but it’ll be more subtle.’

Experts such as Aviv Ovadya, chief technologist at the Center for Social Media Responsibility at the University of Michigan, have warned that such tactics could be used to create fake grassroots political movements, which people would struggle to distinguish from real ones.

Such groups could be a potent new tool to manipulate elections.

Coley says that in the future, people wanting to manipulate the news could simply hire groups of compromised social media accounts – appearing to be legitimate, but in reality controlled with a goal of spreading misinformation.

‘You can hire someone with a troll farm to do that for you very easily,’ he says. ‘Just go on the darknet, and it’s all for sale.’

Coley says that hackers may use these tactics to manipulate news for political ends – or simply to manipulate the share prices of companies.

Coley says, ‘Hackers can generate immediate returns – manipulating the news to your end.

‘We saw the scope of that when Kylie Jenner posted, “Does anyone use Snapchat any more,” and it wiped $1.5 billion off [Snap’s] share price.’

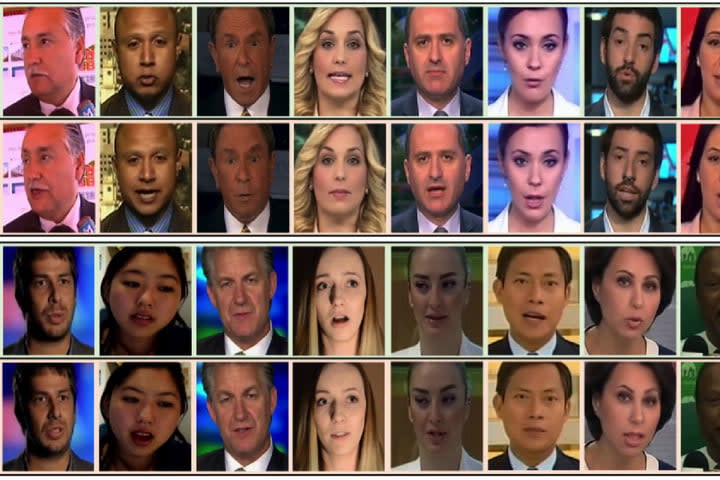

Deepfake video

Within two to three years, it may no longer be possible to tell what is ‘fake news’ and what isn’t, thanks to AI-driven video technology.

A convincing-looking video made by Buzzfeed, in which Barack Obama appears to say surreal things, such as suggesting that Ben Carson is ‘in the sunken place’, has highlighted the power of so-called ‘Deepfake’ videos.

The video was actually produced with an app that automatically maps one person’s face onto another’s, so that a politician, for instance, can be made to say things he never said.

The technology, known as ‘Deepfake’, has been widely used for porn, with AI software mapping celebrities’ faces onto performers in real pornographic videos.

There are dozens of videos ‘starring’ celebrities ranging from Natalie Portman to Scarlett Johansson.

But many fear that the next stage is to use this technology to create ‘fake news’.

The Obama video took 57 hours to create, with the help of actor Jordan Peele and a video effects consultant, Buzzfeed said.

But it’s inevitable that it’s going to get easier, and cheaper, to create such videos.

Virginia Senator Mark Warner said, ‘The idea that someone could put another person’s face on an individual’s body, that would be like a home run for anyone who wants to interfere in a political process.’

Automated laser phishing

In the near future, ‘fake news’ might be delivered to you in a convincing message that appears to come from one of your friends.

Aviv Ovadya told Buzzfeed that the tactic involves using AI to scan people’s social media presences, then craft false but believable messages that appear to come from them.

Attackers would use tools to generate messages that convincingly imitate a real person, and to do so almost instantly.

Ovadya said, ‘Previously one would have needed to have a human to mimic a voice or come up with an authentic fake conversation – in this version you could just press a button using open source software. That’s where it becomes novel – when anyone can do it because it’s trivial. Then it’s a whole different ball game.’

Yahoo News