Google wants to make spotting AI-generated images easier

A picture is worth a thousand words, but sometimes those words can be thoroughly misleading. Uncannily realistic images can be generated by artificial intelligence (AI) through applications like Midjourney, and without clear labelling, people can easily believe the evidence of their own eyes, even if it’s complete fiction.

Google, which has its own big plans for generative AI over the coming months, has announced two features to help people establish which pictures are real and which are algorithmically generated.

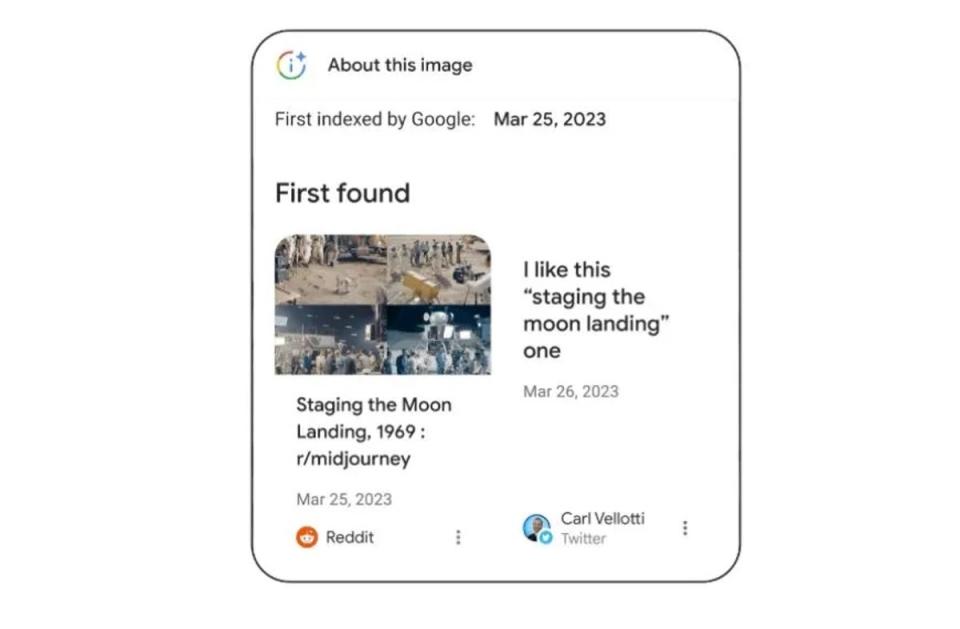

Coming in the next few months and initially rolling out in the US, the first is a tool called “About this image”. It will be accessible via the three dots next to a picture in Google Image Search, by searching with a picture in Google Lens, or by swiping up in the Google App, and will provide key information such as:

When the image and similar pictures were first indexed by Google (to give you an idea of whether it’s new or not)

Where it first appeared online (because a reputable news site is more reliable than 4chan)

Where else it’s been published (fact-checking sites, social media, or news organisations)

With this information, users can get a better idea of where the image originated and how it spread. Did it come from the BBC or a partisan Facebook page? Is the context in which it’s used elsewhere completely different to the description you’ve seen it with? Google hopes this additional context will better inform users.

With Google ramping up its own generative-image capabilities, the company has also pledged to add markup to the original files of the images it generates, clearly labelling them as AI-generated. The company says it has buy-in from Midjourney and Shutterstock on this, with others set to follow.

In a blog post accompanying the announcement, Google pointed to a 2022 Poynter study saying that 62 per cent of people think they come across misinformation on at least a weekly basis. “That’s why we continue to build easy-to-use tools and features on Google Search to help you spot misinformation online, quickly evaluate content, and better understand the context of what you’re seeing,” explains Cory Dunton, product manager of Search at Google.

Of course, while well-intentioned, both of these features require the user to be intellectually curious enough to look beyond the picture, not accepting it at face value. Arguably, people with that degree of existing scepticism aren’t really the ones who need to be reached if Google wants to slow the spread of misinformation.

There are plenty of times when a fake or misleading image has gone viral on social media, only for the correction or additional context to get nowhere. This extra contextual information from Google is a step in the right direction, but it’s unlikely to make much of a dent in a problem that’s only going to worsen substantially over the next decade.

Yahoo News
