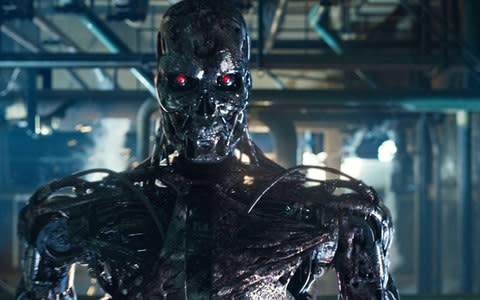

Prepare for rise of 'killer robots', says former defence chief

The rise of military ‘killer robots’ is almost inevitable and any attempt at an international ban will struggle to stop an arms race, according to a former defence chief who was responsible for predicting the future of warfare.

The potential advantages of artificially intelligent war machines that can make decisions, learn and open fire without human control will see countries face growing pressure to adopt the technology, despite ethical misgivings.

Gen Sir Richard Barrons told the Telegraph that a pre-emptive international ban such as the one called for by technology luminaries last week is only likely to be flouted by unscrupulous countries.

The retired senior officer spoke after more than 100 technology leaders wrote an open letter calling on the United Nations to outlaw so-called lethal autonomous weapons.

Campaign groups have also warned the technology will lead to more civilian casualties and abuses.

Until last year, Sir Richard led the UK’s Joint Forces Command, which has responsibility for preparing for future conflicts. He said the military was facing a revolution based on technology already set to transform the civilian world.

Technology experts predict artificial intelligence will soon be used to make flying drones, armoured vehicles and submarines that can find and recognise targets, make decisions on whether to open fire and learn as they go.

In one example, the Russian arms manufacturer Kalashnikov last month announced a machine gun-armed “fully automated combat module” it claimed can identify targets and make decisions on its own.

Elon Musk, the chief of Tesla, and 115 robotics and artificial intelligence experts warned in their letter that robots would lead to wars “at a scale greater than ever, and at timescales faster than humans can comprehend”. “We do not have long to act,” they wrote. “Once this Pandora’s box is opened, it will be hard to close.”

Britain has said its weapons will always maintain human control.

Sir Richard, who retired last year as one of the Army’s most senior generals, said: “If you ask the Ministry of Defence here, they will say as a matter of policy we are not going to do autonomous capability. There will always be a man in the loop. But if you ask other people around the world, they don’t have the same value struggle.

“Even if you don’t plan to have this capability yourself, you are going to have to deal with the fact that machines are going to turn up that are designed to be lethal and there’s no man controlling them at the time.”

Civilian firms building driverless cars and computer networks that can learn are already well ahead of defence giants, robotics experts believe.

“This is bound to come and because militaries will be following the civil sector, this technology is likely to be cheaper than the stuff they have now and therefore more affordable and effective,” Sir Richard said.

While some situations would always need human control, automated armed sentries that could guard nuclear reactors, or keep people out of restricted areas such as the demilitarised zone (DMZ) between North and South Korea, could be among the first uses, Sir Richard said.

He said machines could replace bored 19-year-olds standing on sentry duty.

“Being 19-year-olds they think about mobile phones and sex as much as they think about the opposition,” he explained. “They get hot, they get cold, so their attention span is shorter and a machine doesn’t blink, doesn’t get hot, doesn’t get cold and just follows the rules. In a defined space like the DMZ it’s as simple as: see something move and shoot at it.”

They would also keep troops out of harm’s way.

“Why would you send a 19-year-old with a rifle into a house first to see if anything is in there if you could send a machine? And there are many, many, many examples in the land and maritime environments.”

As they developed, they would also become cheaper and help solve some of the West’s military manpower problems.

He said: “The temptation to have them I think will be terrific because they will be more effective, they will be cheaper, they will take people out of harm’s way and will give you bigger armed forces, so the pressure to have them will only grow.

“You find ways of delivering the military output that you want at much cheaper cost. You are buying a machine that is essentially civilian technology bundled into a military role. There you’ve got a machine that doesn’t get bored, that doesn’t have to be replaced when it resigns early, that never has a pension, or a hospital. This is a really powerful factor.”

He said the failure to stop nuclear weapons from spreading suggested that a ban on autonomous weapons was also likely to fail.

He said: “The global record of having rules which then mean that stuff doesn’t proliferate isn’t terrific.”

Liz Quintana, director of military science at the Royal United Services Institute, agreed. She said: “It’s one thing to actually agree on a ban and it’s another to implement it.

“The rate of advancing technology and benefits from the technology are such in all sectors that it will be difficult to enforce.”

Yahoo News